Frontend Observability: See what's actually breaking for your users

What is frontend observability?

Frontend observability is the practice of instrumenting your web application to collect, correlate, and analyse data about how it performs and behaves in production with real users. Unlike traditional monitoring, which tells you what is happening, observability helps you understand why it is happening.

At its core, frontend observability combines three pillars of data:

- Metrics - Quantitative measurements like Core Web Vitals, page load times, and resource timings

- Traces - The journey of a user interaction through your application, from click to render

- Logs - Discrete events, errors, and contextual information about the user's environment

The power comes from correlation. When you can filter metrics by device type, slice traces by network speed, or compare error rates across browsers and regions, you stop guessing and start finding root causes.

Why frontend observability matters

Modern web applications are complex distributed systems. Your frontend code runs in thousands of different environments - various browsers, devices, network conditions, and geographical locations. What works perfectly in your development environment might fail for users on slow 4G connections or older devices.

The visibility gap between backend and frontend

A critical challenge facing development teams is the disconnect between backend and frontend monitoring. You could have a perfectly running backend whilst your users struggle with slow page loads, JavaScript errors, or unresponsive interfaces. Your API response times might be excellent, but third-party scripts, slow image loading, or client-side rendering bottlenecks can still destroy the user experience.

Frontend observability bridges this gap by providing visibility into what actually happens in the browser, across diverse devices, browsers, and geographic locations - each with unique performance characteristics and potential failure modes.

The browser as your system's nervous system

When a single button click can fan out into five services, two CDNs, and a third-party script, the browser is no longer a thin presentation layer; it is part of your system's nervous system. Single-page applications maintain state across distributed components, execute business logic directly in the browser, and integrate with dozens of services.

This complexity creates debugging challenges. Issues that appear random - "the interface freezes sometimes" - might only occur on specific browser versions, device types, or network conditions. Without proper observability, these problems remain invisible until they significantly impact users.

The cost of poor frontend performance

Performance is a feature, not an afterthought. Research consistently shows that frontend performance directly impacts business metrics:

- 67% of businesses report lost revenue due to poor website performance

- 53% of mobile site visits are abandoned when a page takes longer than 3 seconds to load (Google/DoubleClick, 2016)

- Every 100ms improvement in load time can increase conversion rates by 1-2%

- Poor Core Web Vitals scores correlate with higher bounce rates and lower engagement

- Performance issues often affect specific user segments, making them invisible in aggregate metrics

Without observability, you're flying blind. You might know your average page load time, but you don't know:

- Which user segments experience the worst performance

- What specific resources or third-party scripts cause slowdowns

- How performance varies across different user journeys

- Why some pages perform well whilst others struggle

- The business impact of performance issues on conversion and revenue

From reactive to proactive

Traditional monitoring is reactive - you discover problems after users complain or metrics spike. Frontend observability enables proactive performance management by:

- Detecting issues before users notice - Set alerts on key frontend metrics to identify slowdowns or failures before most users are even aware of the problem

- Reducing MTTD and MTTR - Mean time to detect and mean time to resolve improve dramatically when you have full context and distributed traces showing the path to failure

- Understanding user impact - Correlate performance with business metrics to prioritise fixes based on actual revenue impact

- Validating optimisations - Measure the real-world impact of performance improvements across user segments

- Building user trust - Reliability and performance build confidence; every fast load and seamless interaction reinforces trust in your product

This shift from reactive firefighting to proactive problem-solving enables teams to deliver resilient, high-performing digital experiences that users rely on.

Key components of frontend observability

Who benefits from frontend observability?

Frontend observability serves multiple roles across your organisation:

- Frontend engineers - Optimise performance and reliability, debug production issues with full context

- SRE teams - Gain end-to-end visibility from backend services to actual user impact

- Product teams - Analyse real-world user behaviour patterns and feature engagement

- Performance engineers - Target load optimisation based on actual user experience data

- Business stakeholders - See performance data that can be correlated with conversion and revenue metrics

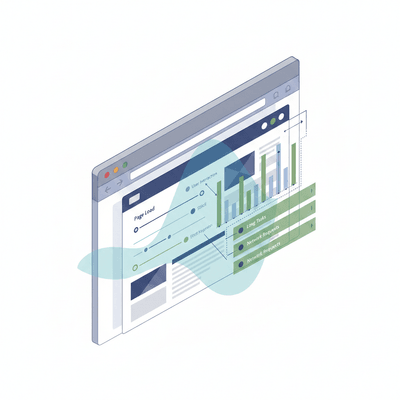

1. Real User Monitoring (RUM)

RUM collects performance metrics from actual users as they interact with your site, capturing authentic experiences across different devices, browsers, and network conditions - revealing issues that are otherwise difficult to surface through synthetic testing. This includes:

- Navigation timing - DNS lookup, connection time, response time, DOM processing

- Core Web Vitals - LCP, INP, CLS measured in real user conditions

- Resource timing - Load times for images, scripts, stylesheets, and third-party resources

- Device metadata and geolocation - Understand performance variations by geography and device type

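- Network information - Connection type, effective bandwidth, and latency characteristics, where the browser exposes them (the Network Information API is currently limited to Chromium-based browsers)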

- Custom metrics - Application-specific timings like "time to interactive cart" or "checkout flow duration"

Geographic mapping capabilities allow you to isolate regional outages or traffic anomalies, whilst dimensional slicing by operating system, browser, and device reveals performance patterns that aggregate metrics mask.
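
As a rough illustration of the navigation timing breakdown listed above, the sketch below derives each phase from a `PerformanceNavigationTiming` entry. The function name and the choice of phase boundaries are assumptions made for this example; RUM SDKs may slice the timeline differently.

```javascript
// Break a PerformanceNavigationTiming-like entry into the phases listed above.
// All timestamps are milliseconds relative to the start of the navigation.
function summariseNavigationTiming(entry) {
  return {
    dnsLookup: entry.domainLookupEnd - entry.domainLookupStart,
    connection: entry.connectEnd - entry.connectStart,
    serverResponse: entry.responseStart - entry.requestStart,
    responseDownload: entry.responseEnd - entry.responseStart,
    // From the last response byte until DOMContentLoaded handlers have finished.
    domProcessing: entry.domContentLoadedEventEnd - entry.responseEnd,
  };
}

// In a browser, read the real entry; outside a browser this step is skipped.
if (typeof window !== 'undefined' && typeof performance !== 'undefined') {
  const [navigationEntry] = performance.getEntriesByType('navigation');
  if (navigationEntry) {
    console.log(summariseNavigationTiming(navigationEntry));
  }
}
```

Keeping the arithmetic in a pure function makes it easy to attach the same breakdown to each RUM event alongside device and geography dimensions.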

2. Error tracking and debugging

Comprehensive error tracking goes beyond scattered console.log() statements. Instead of isolated messages with no surrounding context, modern error tracking provides structured, client-side event logging that captures not only the error but also the complete context surrounding it:

- Stack traces - Source-mapped to your original code, not minified production code

- User context - What the user was doing when the error occurred (page, component, recent interactions)

- Environment data - Browser, device, OS, network conditions

- Application state - Key user interactions and state changes leading to the error

- Custom context - User ID, feature flags, A/B test variants, customer segment

The goal is to reconstruct the sequence of events that led to a problem, moving beyond a simple stack trace to understand what went wrong and why.
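
One way to picture this is a small breadcrumb buffer combined with a structured error event. The sketch below is illustrative only: `createBreadcrumbTrail`, `buildErrorEvent`, and `sendEvent` are hypothetical names, not any vendor's API, and the stack trace it records would still need to be resolved against source maps elsewhere.

```javascript
// Keep the last N breadcrumbs so an error report can show what led up to it.
function createBreadcrumbTrail(maxSize = 20) {
  const crumbs = [];
  return {
    add(type, message) {
      crumbs.push({ type, message, timestamp: Date.now() });
      if (crumbs.length > maxSize) crumbs.shift(); // drop the oldest
    },
    snapshot() {
      return crumbs.slice();
    },
  };
}

// Combine the error, recent breadcrumbs, and custom context into one event.
function buildErrorEvent(error, trail, context = {}) {
  return {
    name: error.name,
    message: error.message,
    stack: error.stack || null, // minified in production builds
    breadcrumbs: trail.snapshot(),
    context,
  };
}

// Browser wiring, skipped elsewhere. `sendEvent` is a hypothetical transport.
if (typeof window !== 'undefined') {
  const trail = createBreadcrumbTrail();
  const sendEvent = (event) => console.log('would send', event);
  document.addEventListener('click', (e) => trail.add('click', e.target.tagName), true);
  window.addEventListener('error', (e) => {
    const error = e.error || new Error(e.message);
    sendEvent(buildErrorEvent(error, trail, { page: location.pathname }));
  });
  window.addEventListener('unhandledrejection', (e) => {
    const reason = e.reason instanceof Error ? e.reason : new Error(String(e.reason));
    sendEvent(buildErrorEvent(reason, trail, { page: location.pathname }));
  });
}
```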

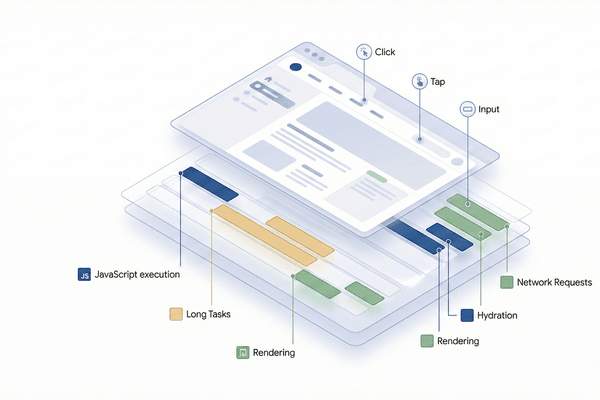

3. Distributed tracing

Distributed tracing follows a user interaction through your entire stack - from the click in the browser, through API calls, to backend services, and back to the rendered result. Using correlation IDs (often W3C Trace Context headers propagated by OpenTelemetry), you can track individual requests across system boundaries, revealing the complete story of a user action.

Transaction-level drill-downs with waterfall diagrams visualise the loading sequence and identify blocking resources. This reveals:

- Which part of your stack contributes most to latency: frontend, network, or backend

- How frontend performance correlates with backend response times

- Where bottlenecks occur in complex multi-service architectures

- The impact of third-party services on user experience

- Whether delays originate in database queries, API gateways, or client-side rendering

This end-to-end visibility is crucial because traditional monitoring might show "all green" on the backend whilst users experience slow page loads due to client-side bottlenecks or network issues. By correlating frontend metrics (Core Web Vitals, error rates) with backend traces and logs, you can diagnose issues that span multiple system layers.
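
To make the question "which layer contributes most to latency" concrete, here is a minimal sketch that totals span durations per layer for a single trace. The span shape (`layer`, `startMs`, `endMs`) and the sample values are invented for illustration; real trace data carries far more detail.

```javascript
// Sum span durations per layer and report each layer's share of traced time.
// Each span is { layer, startMs, endMs }; overlapping spans are counted separately.
function latencyByLayer(spans) {
  const totals = {};
  for (const span of spans) {
    const duration = span.endMs - span.startMs;
    totals[span.layer] = (totals[span.layer] || 0) + duration;
  }
  const grandTotal = Object.values(totals).reduce((sum, ms) => sum + ms, 0);
  return Object.entries(totals)
    .map(([layer, ms]) => ({ layer, ms, share: grandTotal ? ms / grandTotal : 0 }))
    .sort((a, b) => b.ms - a.ms);
}

const exampleTrace = [
  { layer: 'frontend', startMs: 0, endMs: 40 },
  { layer: 'network', startMs: 40, endMs: 120 },
  { layer: 'backend', startMs: 120, endMs: 520 },
  { layer: 'frontend', startMs: 600, endMs: 760 },
];
console.log(latencyByLayer(exampleTrace)[0].layer); // "backend"
```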

4. Interaction and navigation tracking

Observability tools track how users move through your application and where they experience friction:

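- Full page loads via the Navigation Timing API, plus client-side route changes in single-page applications (which Navigation Timing does not cover and which need router or History API instrumentation)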

- Interaction timing for INP attribution (which elements are slow to respond)

- Breadcrumbs showing the sequence of events leading up to errors

- User flows through multi-step processes like checkout
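
For INP attribution specifically, a sketch along the following lines groups Event Timing entries by interaction and surfaces the slowest ones. The helper name and the simplified entry shape are assumptions for this example, and browser support for the `event` entry type varies.

```javascript
// Group Event Timing-like entries by interaction and keep the longest event
// per interaction, since one interaction can produce several events.
function slowestInteractions(entries, limit = 3) {
  const byInteraction = new Map();
  for (const entry of entries) {
    if (!entry.interactionId) continue; // 0 means not part of a user interaction
    const current = byInteraction.get(entry.interactionId);
    if (!current || entry.duration > current.duration) {
      byInteraction.set(entry.interactionId, entry);
    }
  }
  return [...byInteraction.values()]
    .sort((a, b) => b.duration - a.duration)
    .slice(0, limit);
}

// Browser wiring, skipped elsewhere.
if (typeof window !== 'undefined' && typeof PerformanceObserver !== 'undefined') {
  const seen = [];
  try {
    new PerformanceObserver((list) => {
      for (const e of list.getEntries()) {
        seen.push({
          interactionId: e.interactionId,
          duration: e.duration,
          name: e.name,
          target: e.target ? e.target.nodeName : null,
        });
      }
      console.log(slowestInteractions(seen));
    }).observe({ type: 'event', durationThreshold: 40, buffered: true });
  } catch (err) {
    // The 'event' entry type is not available in this browser.
  }
}
```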

5. Custom instrumentation

Beyond standard metrics, observability platforms allow you to track business-specific events and timings:

- Custom user journeys (e.g., "add to cart" to "checkout completion")

- Feature usage and engagement metrics

- A/B test variant performance

- Business events correlated with performance data
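
A custom journey timing can be as small as the sketch below, which records intermediate steps and a total duration. `createJourneyTimer` is a hypothetical helper, not part of any SDK; the clock is injectable so that it can be tested, and defaults to `performance.now()`.

```javascript
// Time a multi-step journey. `now` is injectable so the timer can be tested;
// by default it uses the high-resolution performance.now() clock.
function createJourneyTimer(name, now = () => performance.now()) {
  const startedAt = now();
  const steps = [];
  return {
    step(label) {
      steps.push({ label, atMs: now() - startedAt });
    },
    finish(attributes = {}) {
      return { name, durationMs: now() - startedAt, steps, attributes };
    },
  };
}

// Usage: the returned object is what would be handed to an SDK or transport.
const timer = createJourneyTimer('add-to-cart-to-checkout');
timer.step('cart-opened');
const journey = timer.finish({ variant: 'B' });
console.log(journey.name, journey.steps.length);
```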

6. Full frontend-to-backend observability

The most powerful observability implementations connect frontend and backend telemetry into a unified system. This end-to-end visibility means you can follow a single user interaction from the browser click, through your entire backend infrastructure, and back to the rendered response.

What full-stack observability enables:

- Complete request tracing - Follow a transaction from browser → API gateway → microservices → database → cache → response → render, all in one trace

- Unified correlation - Connect frontend errors with backend logs, relating what the user experienced to what happened in your services

- Holistic performance analysis - Understand whether slowdowns originate in network latency, backend processing, database queries, or client-side rendering

- Cross-team collaboration - Frontend and backend engineers share the same observability data, breaking down silos

- Root cause identification - Pinpoint whether issues originate in frontend code, network, API responses, or third-party services

Implementation approaches:

Full-stack observability typically requires:

- Unified instrumentation - Using standards like OpenTelemetry to instrument both frontend (browser) and backend (services, databases) with compatible trace formats

- Trace context propagation - Passing correlation IDs and trace context from browser requests through HTTP headers to backend services

- Centralised data platform - Aggregating telemetry from all layers (frontend, backend, infrastructure) in a single observability platform for correlation and analysis

- Consistent metadata - Tagging traces with consistent attributes (user ID, session ID, release version) across frontend and backend

While implementing full-stack observability requires coordination between teams and tooling, the payoff is significant: you gain a complete picture of your system's behaviour from the user's perspective, making it dramatically easier to diagnose complex issues that span multiple layers of your architecture.
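
Trace context propagation usually rides on the W3C Trace Context `traceparent` header, whose value has the form `version-traceId-spanId-flags`. The sketch below builds and parses that value by hand purely to show the format; the function names are made up for this example, and in practice an OpenTelemetry SDK would normally generate and read the header for you.

```javascript
// Random lowercase hex string representing `byteCount` bytes.
function randomHex(byteCount) {
  const bytes = new Uint8Array(byteCount);
  if (globalThis.crypto && globalThis.crypto.getRandomValues) {
    globalThis.crypto.getRandomValues(bytes);
  } else {
    // Fallback for runtimes without Web Crypto; fine for a demo only.
    for (let i = 0; i < byteCount; i++) bytes[i] = Math.floor(Math.random() * 256);
  }
  return Array.from(bytes, (b) => b.toString(16).padStart(2, '0')).join('');
}

// Build a traceparent value: 16-byte trace ID, 8-byte span ID, sampled flag.
function createTraceparent(sampled = true) {
  return `00-${randomHex(16)}-${randomHex(8)}-${sampled ? '01' : '00'}`;
}

// Parse and validate a traceparent value on the receiving side.
function parseTraceparent(header) {
  const match = /^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/.exec(header);
  if (!match) return null;
  const [, version, traceId, spanId, flags] = match;
  if (/^0+$/.test(traceId) || /^0+$/.test(spanId)) return null; // all-zero IDs are invalid
  return { version, traceId, spanId, sampled: (parseInt(flags, 16) & 1) === 1 };
}

console.log(parseTraceparent(createTraceparent()));
```

A browser request would then carry the value as a header, for example `fetch(url, { headers: { traceparent: createTraceparent() } })`, and the backend would continue the same trace ID in its own spans.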

Frontend observability vs traditional monitoring

| Aspect | Traditional Monitoring | Frontend Observability |
|---|---|---|
| Data source | Synthetic tests, basic RUM metrics, server logs, uptime checks | Real user interactions with full context, client-side instrumentation, distributed traces |
| Questions answered | "Is the site up?" "What's the average load time?" | "Why is LCP slow for mobile users in Germany?" "Which third-party script causes high INP?" |
| Data granularity | Aggregated metrics, percentiles | Individual user sessions with full context |
| Debugging capability | Limited - shows symptoms, not causes | Comprehensive - traces issues to root cause |
| User segmentation | Minimal - usually just geography | Extensive - device, browser, network, user journey, feature flags |
| Alerting | Threshold-based alerts on aggregated metrics | Anomaly detection with context about affected users and sessions |

Frontend observability and Core Web Vitals

Core Web Vitals measure user experience through loading speed, visual stability, and interaction responsiveness. Traditional monitoring tells you that your scores are poor. Frontend observability tells you why and for whom.

Here's what observability platforms reveal that basic Core Web Vitals reporting cannot:

- Root cause attribution - Trace poor metric scores to specific resources, third-party scripts, or code paths

- User segmentation - Discover that LCP is only slow for mobile users in Asia, or INP only fails on older devices

- Element-level insights - Identify exactly which element causes your LCP or which DOM elements trigger layout shifts

- Journey correlation - Understand how performance affects specific user flows (checkout, search, product pages)

- Trend analysis - Track how Core Web Vitals change over time and catch regressions before they affect many users
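
As a small illustration of element-level insight, the sketch below rates a metric value against the published Core Web Vitals thresholds and, in a browser, logs which element produced the latest LCP candidate. `rateWebVital` is a hypothetical helper written for this example; the `web-vitals` library and most RUM SDKs provide their own equivalents.

```javascript
// Published "good" / "poor" boundaries for each Core Web Vital
// (LCP and INP in milliseconds, CLS is unitless).
const WEB_VITAL_THRESHOLDS = {
  LCP: { good: 2500, poor: 4000 },
  INP: { good: 200, poor: 500 },
  CLS: { good: 0.1, poor: 0.25 },
};

function rateWebVital(name, value) {
  const limits = WEB_VITAL_THRESHOLDS[name];
  if (!limits) throw new Error(`Unknown metric: ${name}`);
  if (value <= limits.good) return 'good';
  if (value <= limits.poor) return 'needs-improvement';
  return 'poor';
}

// Browser wiring, skipped elsewhere: report the element behind the latest LCP candidate.
if (typeof window !== 'undefined' && typeof PerformanceObserver !== 'undefined') {
  try {
    new PerformanceObserver((list) => {
      const entries = list.getEntries();
      const latest = entries[entries.length - 1];
      const element = latest.element ? latest.element.tagName : '(element unavailable)';
      console.log('LCP', latest.startTime, rateWebVital('LCP', latest.startTime), element);
    }).observe({ type: 'largest-contentful-paint', buffered: true });
  } catch (err) {
    // Entry type not supported in this browser; nothing to report.
  }
}
```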

For detailed optimisation strategies for each metric, see our guides on LCP, INP, and CLS.

Tools and platforms

The frontend observability landscape includes both commercial platforms and open-source solutions. Here's an overview of leading options:

Commercial observability platforms

1. Honeycomb

Honeycomb is a full-stack observability platform with strong frontend capabilities. It excels at:

- High-cardinality data analysis - query across any dimension without pre-aggregation

- Distributed tracing from frontend to backend

- BubbleUp feature for surfacing which attributes distinguish outlier events from the baseline

- Custom instrumentation with flexible event data

Best for: Engineering teams wanting deep insights and custom instrumentation

2. Datadog RUM

Part of the broader Datadog monitoring platform:

- Unified observability across frontend, backend, and infrastructure

- Session replay and user journey analysis

- Synthetic monitoring integration

- Extensive third-party integrations

Best for: Organisations already using Datadog for infrastructure monitoring

3. Dynatrace

AI-powered observability with automatic instrumentation:

- Automatic real user monitoring without manual instrumentation

- Session replay with performance correlation

- AI-driven root cause analysis (Davis AI)

- Full-stack distributed tracing from browser to backend

Best for: Enterprise teams wanting automated observability with AI insights

Open-source solutions

Grafana Faro

Open-source frontend observability from the Grafana team:

- Real user monitoring with Web Vitals

- Error tracking and session metadata

- Integration with Grafana ecosystem (Tempo, Loki, Prometheus)

- Self-hosted or Grafana Cloud options

Best for: Teams already using Grafana with self-hosting requirements

OpenTelemetry + Custom Backend

For complete control, combine OpenTelemetry instrumentation with your own backend:

- Vendor-neutral instrumentation standard

- Send data to any backend (Jaeger, Tempo, custom solution)

- Complete data ownership and privacy

- Requires significant engineering investment

Best for: Large engineering organisations with specific requirements and resources

Choosing the right tool

Consider these factors when selecting a frontend observability platform:

- Primary use case - Error tracking, performance monitoring, or comprehensive observability?

- Team size and expertise - Some tools require more technical knowledge to use effectively

- Existing infrastructure - Integration with current monitoring and development tools

- Budget - Pricing varies widely from open-source (free) to enterprise platforms

- Data privacy requirements - Some industries require self-hosted solutions

- Traffic volume - Costs scale with data ingestion; consider sampling strategies

Implementing frontend observability

Successfully implementing frontend observability requires careful planning and execution. Here's a practical approach:

Phase 1: Foundation (Week 1-2)

1. Define your objectives

Start by identifying what you want to achieve:

- Improve Core Web Vitals scores for SEO and user experience

- Reduce JavaScript errors affecting user journeys

- Understand performance across different user segments

- Correlate performance with business metrics (conversion, revenue)

- Debug complex frontend issues faster

2. Choose your platform

Based on your objectives, team expertise, and budget, select an observability platform. Consider starting with a trial to validate fit.

3. Implement basic instrumentation

Most platforms provide an SDK or JavaScript snippet for basic instrumentation:

Example: Initialising a frontend observability SDK

    import { init } from '@observability-vendor/browser';

    init({
      serviceName: 'my-web-app',
      environment: 'production',

      // Performance monitoring
      enableRUM: true,
      enableWebVitals: true,

      // Error tracking
      enableErrorTracking: true,

      // Sampling configuration
      sampleRate: 1.0, // 100% for initial setup, reduce after validation

      // User privacy
      maskAllText: false,
      maskAllInputs: true,
    });

Phase 2: Custom instrumentation (Week 3-4)

4. Add custom metrics

Instrument business-critical user journeys and interactions:

Example: Track custom user journey timing

    import { measure } from '@observability-vendor/browser';

    // Start timer when user adds item to cart
    const addToCartTimer = measure.start('add-to-cart-flow');

    // ... user completes action ...

    // End timer and send metric
    addToCartTimer.end({
      productId: product.id,
      category: product.category,
      userType: user.isPremium ? 'premium' : 'standard',
    });

5. Implement distributed tracing

Connect frontend traces to backend services for end-to-end visibility:

Example: Propagate trace context to backend

    import { trace } from '@observability-vendor/browser';

    const response = await fetch('/api/checkout', {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        ...trace.getTraceHeaders(), // Propagate trace context
      },
      body: JSON.stringify(orderData),
    });

6. Add contextual data

Enrich events with user and session context:

Example: Set user and session context

    import { setUser, setContext } from '@observability-vendor/browser';

    setUser({
      id: user.id,
      email: user.email, // Be mindful of PII regulations
      plan: user.subscriptionPlan,
    });

    setContext({
      experimentVariant: abTestVariant,
      featureFlags: enabledFeatures,
      appVersion: APP_VERSION,
    });

Phase 3: Optimisation (Week 5-6)

7. Configure sampling

For high-traffic sites, implement sampling to control costs whilst maintaining statistical significance:
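
One common way to keep sampled data coherent is to decide per session rather than per event, so that a sampled session retains all of its events. The sketch below hashes the session ID to make that decision deterministic; the hash choice (FNV-1a over UTF-16 code units) and the function names are assumptions for this example, not a description of how any particular SDK samples.

```javascript
// FNV-1a hash of a string, returned as an unsigned 32-bit integer.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Decide once per session, so a sampled session keeps all of its events.
function isSessionSampled(sessionId, sampleRate) {
  if (sampleRate >= 1) return true;
  if (sampleRate <= 0) return false;
  // Map the hash onto [0, 1) and keep the session if it falls below the rate.
  return fnv1a(sessionId) / 0x100000000 < sampleRate;
}

console.log(isSessionSampled('session-42', 0.1));
```

Because the decision depends only on the session ID, the same session gets the same answer on every page and every reload.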

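Example: Adaptive sampling strategy

    // Decide how much to keep once the outcome of an event or session is known.
    // `webVitalsRating` is 'good', 'needs-improvement', or 'poor'.
    const getSampleRate = ({ hasError, webVitalsRating }) => {
      // Sample 100% of errors
      if (hasError) return 1.0;

      // Sample 100% of poor Core Web Vitals
      if (webVitalsRating === 'poor') return 1.0;

      // Sample 10% of normal traffic
      return 0.1;
    };

8. Set up alerts and dashboards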

Create dashboards for key metrics and configure alerts for regressions:

- Core Web Vitals dashboard - LCP, INP, CLS trends by device and geography

- Error rate dashboard - JavaScript errors, network failures, segmented by release

- Business metrics dashboard - Conversion rates correlated with performance

- Alerts - Notify team when metrics degrade beyond thresholds

9. Integrate with development workflow

Make observability data actionable by integrating with your development process:

- Link error tracking to issue management (Jira, Linear, GitHub Issues)

- Add performance budgets to CI/CD pipeline

- Include observability links in deployment notifications

- Review observability data in sprint retrospectives
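
A performance budget check in CI can be very simple. The sketch below compares measured values with a budget and lists violations; the metric names, limits, and measurements are illustrative values chosen for this example, and the measurements would normally come from a lab run or recent RUM data.

```javascript
// Compare measured values against a budget and list every violation.
function checkBudget(measured, budget) {
  const violations = [];
  for (const [metric, limit] of Object.entries(budget)) {
    const value = measured[metric];
    if (value === undefined) {
      violations.push(`${metric}: no measurement`);
    } else if (value > limit) {
      violations.push(`${metric}: ${value} exceeds budget ${limit}`);
    }
  }
  return violations;
}

const budget = { lcpMs: 2500, inpMs: 200, cls: 0.1, jsKilobytes: 300 };
const measured = { lcpMs: 2300, inpMs: 240, cls: 0.05, jsKilobytes: 280 };
const violations = checkBudget(measured, budget);
violations.forEach((line) => console.error(line));
// In a CI step, a non-zero exit code fails the build:
// if (violations.length > 0) process.exit(1);
```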

Phase 4: Continuous improvement (Ongoing)

10. Regular analysis and optimisation

Establish a rhythm for analysing observability data and acting on insights:

- Weekly - Review error trends and critical performance regressions

- Monthly - Deep-dive analysis on specific user segments or journeys

- Quarterly - Evaluate observability tool effectiveness and coverage

- Per release - Compare performance before and after deployments

Best practices

1. Start with clear objectives

Don't instrument everything without purpose. Define specific questions you want to answer:

- "Why is our checkout flow abandonment higher on mobile?"

- "Which third-party scripts impact Core Web Vitals most?"

- "How does performance vary by geographical region?"

2. Respect user privacy

Frontend observability involves collecting user data. Implement privacy best practices:

- Mask sensitive data - Credit card numbers, passwords, personal information

- Comply with regulations - GDPR, CCPA, and other privacy laws

- Provide opt-out mechanisms - Allow users to disable tracking

- Review session replays - Ensure they don't capture private information

- Document data collection - Be transparent in your privacy policy
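
Masking can also happen in your own code before an event leaves the browser. The following is a minimal sketch, assuming a hypothetical `scrubEvent` hook and a short list of sensitive key names; the patterns are deliberately broad and would need tuning for real data, and they are no substitute for a privacy review.

```javascript
const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;
// 13 to 19 digits, optionally separated by single spaces or hyphens.
const CARD_PATTERN = /\b\d(?:[ -]?\d){12,18}\b/g;
// Illustrative key names whose values are always dropped.
const SENSITIVE_KEYS = new Set(['password', 'token', 'cardNumber']);

function scrubString(text) {
  return text.replace(EMAIL_PATTERN, '[email]').replace(CARD_PATTERN, '[card]');
}

// Recursively scrub an event payload before it is sent.
function scrubEvent(value, key = '') {
  if (SENSITIVE_KEYS.has(key)) return '[redacted]';
  if (typeof value === 'string') return scrubString(value);
  if (Array.isArray(value)) return value.map((item) => scrubEvent(item));
  if (value && typeof value === 'object') {
    return Object.fromEntries(
      Object.entries(value).map(([k, v]) => [k, scrubEvent(v, k)])
    );
  }
  return value;
}

console.log(scrubEvent({ message: 'Login failed for jane@example.com', password: 'hunter2' }));
```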

3. Optimise instrumentation overhead

Observability tools shouldn't degrade the performance they're measuring:

- Lazy load SDKs - Don't block initial page render

- Use asynchronous APIs - Send data without blocking user interactions

- Implement sampling - Balance data coverage with performance impact

- Monitor the monitor - Track the overhead of your observability tool itself
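
To show what asynchronous, low-overhead delivery might look like, the sketch below batches events and flushes them with `navigator.sendBeacon` when the page is hidden. `createEventQueue` and the `/telemetry` endpoint are hypothetical; vendor SDKs ship their own transports.

```javascript
// Queue events and flush them in batches. `send` is injected so the queue can
// be tested; in a browser it would wrap navigator.sendBeacon or fetch with keepalive.
function createEventQueue(send, maxBatchSize = 10) {
  let pending = [];
  function flush() {
    if (pending.length === 0) return;
    const batch = pending;
    pending = [];
    send(batch);
  }
  return {
    push(event) {
      pending.push(event);
      if (pending.length >= maxBatchSize) flush();
    },
    flush,
  };
}

// Browser wiring, skipped elsewhere. '/telemetry' is a hypothetical endpoint.
if (typeof window !== 'undefined' && typeof navigator !== 'undefined' && navigator.sendBeacon) {
  const queue = createEventQueue((batch) => {
    navigator.sendBeacon('/telemetry', JSON.stringify(batch));
  });
  // Flush when the page is hidden, since it may never become visible again.
  document.addEventListener('visibilitychange', () => {
    if (document.visibilityState === 'hidden') queue.flush();
  });
  queue.push({ type: 'page-view', path: location.pathname });
}
```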

4. Correlate frontend and backend observability

The most powerful insights come from connecting frontend and backend data:

- Use distributed tracing to follow requests end-to-end

- Correlate frontend errors with backend logs

- Understand how API latency affects user experience

- Identify whether issues originate in frontend code or backend services

5. Segment your data

Aggregate metrics hide important variations. Always analyse data by relevant segments:

- Device type - Mobile, tablet, desktop

- Network condition - 5G, 4G, WiFi

- Geography - Country, region, city

- Browser - Chrome, Safari, Firefox, Edge

- User journey - First-time visitors vs returning users

- Customer type - Free users vs paid subscribers
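
The effect of segmentation is easy to see with a small worked example. The sketch below computes the 75th percentile of LCP per device type using a nearest-rank percentile; the sample values are invented and the helper names are assumptions for this illustration.

```javascript
// Nearest-rank percentile of a list of numbers (p between 0 and 100).
function percentile(values, p) {
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Group samples by one dimension and report the 75th percentile per group.
function p75BySegment(samples, dimension) {
  const groups = new Map();
  for (const sample of samples) {
    const key = sample[dimension] ?? 'unknown';
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(sample.value);
  }
  const result = {};
  for (const [key, values] of groups) {
    result[key] = { count: values.length, p75: percentile(values, 75) };
  }
  return result;
}

const lcpSamples = [
  { device: 'desktop', value: 1200 },
  { device: 'desktop', value: 1500 },
  { device: 'desktop', value: 1800 },
  { device: 'desktop', value: 2100 },
  { device: 'mobile', value: 2900 },
  { device: 'mobile', value: 3400 },
  { device: 'mobile', value: 4800 },
  { device: 'mobile', value: 5200 },
];
console.log(p75BySegment(lcpSamples, 'device'));
```

With these made-up samples the overall p75 is 3400 ms, which hides the fact that desktop sits at 1800 ms whilst mobile is at 4800 ms.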

6. Create actionable alerts

Alert fatigue is real. Design alerts that are specific, actionable, and important:

- Use meaningful thresholds - Alert when business impact is significant

- Include context - Alert messages should contain enough information to triage

- Reduce noise - Group similar alerts, use intelligent deduplication

- Define ownership - Ensure alerts reach the team that can act on them
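
Noise reduction often starts with grouping. As a rough sketch, the function below collapses alerts that share a fingerprint within a time window into one notification with a count. The fingerprint fields (`metric`, `page`, `release`) and the five-minute window are illustrative choices for this example, not a recommendation from any alerting product.

```javascript
// Collapse alerts that share a fingerprint within a time window into one
// notification with a count, keeping the owning team for routing.
function groupAlerts(alerts, windowMs = 5 * 60 * 1000) {
  const open = new Map();
  const grouped = [];
  const ordered = [...alerts].sort((a, b) => a.timestamp - b.timestamp);
  for (const alert of ordered) {
    const fingerprint = `${alert.metric}|${alert.page}|${alert.release}`;
    const existing = open.get(fingerprint);
    if (existing && alert.timestamp - existing.firstSeen <= windowMs) {
      existing.count += 1;
      existing.lastSeen = alert.timestamp;
    } else {
      const group = {
        fingerprint,
        owner: alert.owner,
        count: 1,
        firstSeen: alert.timestamp,
        lastSeen: alert.timestamp,
      };
      open.set(fingerprint, group);
      grouped.push(group);
    }
  }
  return grouped;
}
```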

7. Make observability data accessible

Observability isn't just for engineers. Make data accessible to your entire organisation:

- Executive dashboards - High-level metrics tied to business outcomes

- Product manager views - Feature usage and performance correlated with engagement

- Marketing insights - How landing page performance affects conversion rates

- Support team access - Context for debugging customer-reported issues

8. Version your instrumentation

As your application evolves, your instrumentation needs to evolve too:

- Track instrumentation version alongside application version

- Review and update custom metrics regularly

- Remove obsolete instrumentation to reduce overhead

- Document what each custom metric measures and why

Get started with frontend observability

Ready to implement frontend observability? Here's your action plan:

- Define your objectives - What questions do you need to answer? What problems do you need to solve?

- Start with RUM and Core Web Vitals - Get baseline measurements of real user performance

- Choose a platform - Evaluate tools based on your needs, expertise, and budget

- Implement basic instrumentation - Get the SDK running in production with standard metrics

- Add custom instrumentation - Track business-specific journeys and interactions

- Create dashboards and alerts - Make data visible and actionable for your team

- Act on insights - Use observability data to drive performance improvements

- Measure impact - Track how optimisations affect both performance and business metrics

Need help implementing frontend observability or optimising your Core Web Vitals? Contact our web performance consultants for expert guidance and implementation support.

Related resources

- Core Web Vitals Guide - Deep dive into LCP, INP, and CLS

- UX Score - How we quantify web performance impact

- Optimise Largest Contentful Paint (LCP)

- Improve Interaction to Next Paint (INP)

- Fix Cumulative Layout Shift (CLS)

Frequently asked questions

What's the difference between frontend observability and Real User Monitoring (RUM)?

RUM is a subset of frontend observability. RUM focuses specifically on collecting performance metrics from real users, whilst frontend observability encompasses a broader approach including distributed tracing, error tracking, and contextual data correlation. Frontend observability provides deeper insights into why issues occur, not just what is happening.

Do I need frontend observability if I already use synthetic monitoring?

Yes. Synthetic monitoring tests your site from controlled environments with predefined scripts, which is valuable for catching regressions. However, it cannot capture the full variety of real user experiences, devices, network conditions, and user journeys. Frontend observability shows you what actually happens in production with real users.

How does frontend observability help with Core Web Vitals?

Frontend observability platforms collect Core Web Vitals metrics (LCP, INP, CLS) from real users and provide context about why scores are poor. You can trace a slow LCP back to specific resources, identify which interactions cause high INP, and see which elements trigger layout shifts, all with real user data rather than lab conditions.

What's the performance impact of adding observability tools?

Modern observability tools are designed with minimal performance overhead, using asynchronous collection methods and modular architectures that allow tree-shaking unused features. The key is to use sampling for high-traffic sites, lazy-load the SDK after initial page render, and ensure the observability library itself doesn't degrade the experience you're trying to measure.

What tools are commonly used for frontend observability?

Popular options include Honeycomb and Grafana for general observability, Sentry for error tracking, and RUM-focused tools like SpeedCurve, RUMvision, and DebugBear for performance monitoring. OpenTelemetry provides a vendor-neutral standard for instrumentation that works across platforms.
